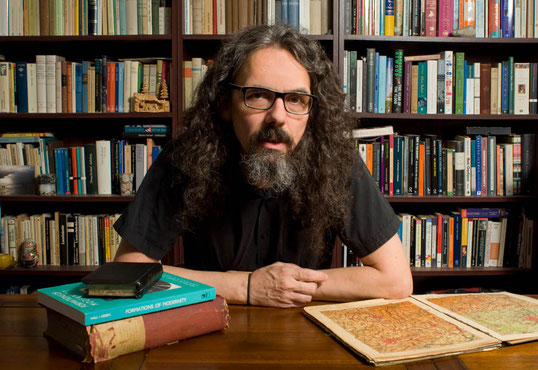

When Graeme Currie was working at a university, he went to the campus library for research and often lingered in the stacks just to enjoy the collection.

Now, as a freelance translator and editor operating remotely from a small town near Hamburg, Germany, Currie doesn’t have that same access. Without an institutional affiliation, he relies on materials in the Internet Archive for his work.

“It’s been vital for me because, at times, it’s the only way I can find what I need,” says Currie, 51, who is originally from Scotland. “For freelancers who are working from home without a library nearby and using obscure sources and out-of-print books, there’s nothing to replace the Internet Archive.”

Currie first heard about the Wayback Machine in the early 2000s as a way to check how websites had changed. Later, he discovered other services the Internet Archive provides, including its audio and book libraries.


As he edits and translates academic books from German to English, Currie says he often has to check citations: looking up page numbers and verifying passages. The virtual collection has been helpful as he researches a range of topics in the arts, social sciences and humanities. Currie says he has borrowed titles on philosophy, criminality and global urban history, including one on the early history of tourism in Sicily.

Many of the books are not only hard to find but also, Currie says, logistically difficult to obtain. Without the Internet Archive, he says, he would have to wait weeks for interlibrary loans or try to contact the authors, who are often unavailable.

“I simply could not do my job without access to a virtual library,” says Currie, who has been freelancing for about five years. “The Internet Archive is like having a university library on your desktop.”

Learn more about Currie at https://www.gcurrie.de/.