It’s 10 am and I’ve already been traveling for 20 hours: two planes and a long layover from California on my way to Ubatuba, a town four hours northeast of São Paulo, Brazil. I feel nervous. I’ve never been to Brazil before, but the bus ride is serene. The city buildings give way to lush rainforest along the mountainside. It’s almost silent on the bus, a calm quiet. I take a cue from the locals, close my eyes, and try to get some rest. I am on my way to DWeb+Coolab Camp Brazil.

View of buildings at the Neos Institute, where campers found cover from the elements. Photo by Bruno Caldas Vianna, licensed under CC BY-NC-ND 4.0.

My phone buzzes. It’s Victor (Coolab) and Dana (Colnodo). They pick me up from the station and we’re off to the Neos Institute, where we’ll spend the next five days together. Coolab Camp is a continuing experiment in the DWeb movement: it weaves together technologists, dreamers, builders, and organizers in a beautiful outdoor setting, provides food and shelter for the week, and then lets the sharing, imagination, and community building fly.

Gathering on the first day to talk about the themes of agriculture and ecology.

I arrive early to help set up parts of the camp, which is being hosted by the Neos Institute for Sociobiodiversity. They are a collective that has spent the last six years rebuilding this once-dilapidated cultural center. One of Neos’ goals is to protect and conserve the surrounding Brazilian Atlantic Forest, of which only about 10% remains in the wake of development.

This spirit of conservation aligns with the themes of Camp: agriculture, sustainability, and ecology. Coolab is bringing together farmers and organizers from Latin America with DWeb builders and technologists to discover how we can take care of both our digital and physical landscapes.

My roommate, Bruna, from the Transfeminist Network of Digital Care, shares their work on Pratododia. They use the metaphor of food to explain how we can practice healthier technology habits. For instance, just as we wash our hands before meals, it’s important to check our security and privacy settings online before tasting everything the internet offers us.

Papaya, mango, and watermelon served during our vegetarian meals.

Coolab Camp is more than a conference; it is an experiment in building a pop-up community. We start each day with a general meeting at the Casarão (Big House), where we forge acordos (agreements) about how to take care of the space and each other.

Alexandre from Coletivo Neos goes over the history of the Neos Institute.

Coolab Camp morning meetings are at once relaxed and energizing.

These acordos range from simple things, like not feeding the cats and taking off our shoes, to strong expressions of our values, like no oppression or discrimination of any kind based on class, race, gender, or sexual orientation. At the beginning of every meeting we reiterate these agreements and ask ourselves: Do we still agree? Does anything need to be changed? Does anything need to be added?

This daily gathering is only possible because the event is small, about 80 people over the five days of Camp. That intimacy means we recognize familiar faces and at least exchange a friendly greeting (Bom dia!). There are no janitors to clean up during the event. We wash our own dishes and clean our own bathrooms.

A community member helps set up the mesh network.

Folks also volunteer to be the “olhos” (eyes) and “ouvidos” (ears) of the community. The Olhos watch out for any misbehavior. The Ouvidos are there to listen if someone has issues they are uncomfortable bringing up to the group. All of this adds to the building of our community.

Marcela and Tomate crafting posters and zines.

How do we communicate at Camp? First, we try some technological solutions, such as a Mumble server with multiple audio channels and AI-powered live translation. But in the end, the best solution is human: having another person by our side.

A lot of the Brazilian campers speak both Portuguese and English, so volunteers sit next to those of us who speak only English and whisper translations. It is incredibly humbling to have community members put so much energy into making sure we are included in the conversations and know what is going on.

Creating our session schedule through unconference.

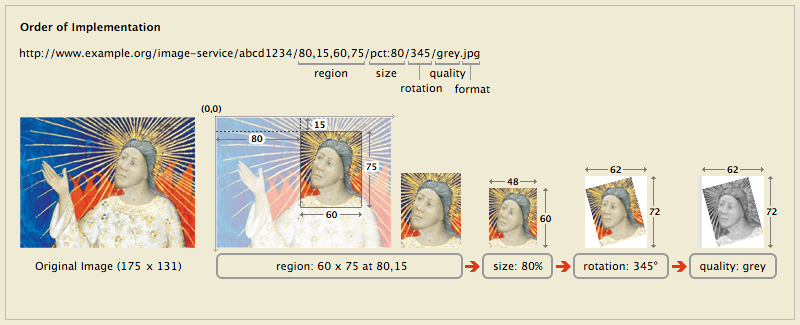

Next comes the fun part: the sessions and workshops! Sessions are organized as an unconference. Everyone proposes sessions and indicates which ones interest them, and the sessions that garner the most interest go on the schedule. Workshops include:

- Learning programming with Scratch for kids and beginners

- Working with a mesh network

- ODD.SDK – a local-first framework for app development

- A presentation on Digital Democracy’s Earth Defender’s Toolkit

An analog map of the campsite showing where routers for the mesh network will go.

Campers gather around the firepit to share experiences working in cooperatives.

Luandro Viera from Digital Democracy shares the Earth Defender’s Toolkit.

One of my favorite sessions is with Ana, a Brazilian farmer and social researcher who guides us through a game called Sanctuaries of Attention. It happens on the last day and is impromptu: after breakfast, they simply ask around for people to join.

Ana leads the session in Portuguese, Spanish, and English. We spend two hours sharing stories about how our attention changes in different situations and which situations feel safe to us: “sanctuaries” we can rest in.

The unconference style suits DWeb+Coolab Camp because it leaves time and space for sessions like this one to happen organically, without constraints.

Ana guides participants through the Sanctuaries of Attention.

Nico teaches programming for beginners using Scratch.

Setting up network equipment for the mesh network on site.

Some sessions are discussions around topics like:

- Experiences as a cooperative

- How to organize groups using sociocracy

- Sharing challenges and workarounds managing a community network

- Methods for social exchange of common resources

It doesn’t hurt that we can hold some of these discussions at the beach. There are also plenty of casual conversations over meals, on a couch, or lounging in a hammock.

Discussion on the beach about community networks.

One of the things I’ll keep with me from those conversations is a new way of understanding the saying, “The future is already here — it’s just not evenly distributed yet.”

Those of us from luckier circumstances fret about the end of the world. Those from different circumstances have already seen it happen. Their economic systems have collapsed or their environment is suffering through the worst of the climate catastrophe. The end of the world is already here — it’s just not evenly distributed yet.

But an end is just a new beginning. Here in Brazil, we meet in the forest with people who are already rebuilding, regenerating from the ruins. The contributions we make will remain. Regeneration is already here — it’s just not evenly distributed yet.

Creating improvised music with Música de Círculo around the campfire.

Peixe (Fish) and Ondas (Waves), the spaces where sessions were held.

Farmers, organizers, designers and technologists at DWeb+Coolab Camp Brazil 2023.

We could have been anywhere, but we got the opportunity to be within the songs of the birds, the whispers of the trees, and the laughter of the sea. Within smiles and greetings, warm embraces and supportive shoulders. To all the people who brought us together: Tania, Hiure, Marcela, Luandro, Victor, Dana, Bruno, Marcus, Coletivo Neos, and anyone else I may have forgotten, thank you for showing us how to regenerate culture, environment, and technology through community. Obrigado!

All photos by Melissa Rahal, licensed under CC BY-NC-ND 4.0, unless otherwise stated.