Internet Archive crawls and saves web pages and makes them available for viewing through the Wayback Machine because we believe in the importance of archiving digital artifacts for future generations to learn from. In the process, of course, we accumulate a lot of data.

We are interested in exploring how others might be able to interact with or learn from this content if we make it available in bulk. To that end, we would like to experiment with offering access to one of our crawls from 2011: about 80 terabytes of WARC files containing captures of about 2.7 billion URIs. The files contain text content and any media that we were able to capture, including images, Flash, videos, etc.
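For readers who have not worked with WARC files before, here is a minimal sketch of how the records in one might be walked using only the Python standard library. It assumes an uncompressed stream with simple, single-line headers; the function name `iter_warc_records` and the sample bytes are made up for illustration and are not part of any Internet Archive tooling. If a file is gzip-compressed, wrapping it with `gzip.open(path, "rb")` first should work, though we have not stated here how the files in this crawl are packaged.

```python
import io
from typing import BinaryIO, Dict, Iterator, Tuple


def iter_warc_records(stream: BinaryIO) -> Iterator[Tuple[Dict[str, str], bytes]]:
    """Yield (headers, payload) for each record in an uncompressed WARC stream.

    Illustrative sketch only: it does not handle folded (multi-line) headers
    or validate records beyond checking the version line.
    """
    while True:
        line = stream.readline()
        if not line:
            return  # end of stream
        if line.strip() == b"":
            continue  # blank separator lines between records
        if not line.startswith(b"WARC/"):
            raise ValueError(f"expected a WARC version line, got {line!r}")

        # Read "Name: value" header lines until the blank line that ends them.
        headers: Dict[str, str] = {}
        while True:
            line = stream.readline()
            if line in (b"\r\n", b"\n", b""):
                break
            name, _, value = line.decode("utf-8", "replace").partition(":")
            headers[name.strip()] = value.strip()

        # The record body is exactly Content-Length bytes long.
        length = int(headers.get("Content-Length", "0"))
        payload = stream.read(length)
        yield headers, payload


# Hypothetical two-record sample, built in memory for demonstration.
sample = (
    b"WARC/1.0\r\nWARC-Type: response\r\n"
    b"WARC-Target-URI: http://example.com/\r\nContent-Length: 5\r\n\r\n"
    b"hello\r\n\r\n"
    b"WARC/1.0\r\nWARC-Type: metadata\r\nContent-Length: 2\r\n\r\n"
    b"hi\r\n\r\n"
)

for headers, payload in iter_warc_records(io.BytesIO(sample)):
    print(headers.get("WARC-Type"), headers.get("WARC-Target-URI"), len(payload))
```

For real work, a maintained WARC library is likely a better choice than a hand-rolled reader like this one.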

What’s in the data set:

- Crawl start date: 09 March, 2011

- Crawl end date: 23 December, 2011

- Number of captures: 2,713,676,341

- Number of unique URLs: 2,273,840,159

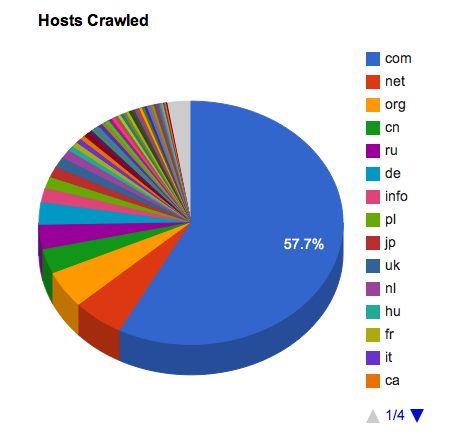

- Number of hosts: 29,032,069
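As a rough back-of-the-envelope check, the counts above can be combined into a few ratios. The sketch below treats "about 80 terabytes" as 80 × 10^12 bytes, which is an assumption, so the per-capture figure is only an order-of-magnitude estimate of on-disk size (including WARC record overhead and whatever compression was used), not a measured page size.

```python
# Counts taken from the data set summary in this post.
captures = 2_713_676_341
unique_urls = 2_273_840_159
hosts = 29_032_069

# Assumption: "about 80 terabytes" read as decimal terabytes (10**12 bytes).
approx_total_bytes = 80 * 10**12

captures_per_url = captures / unique_urls
urls_per_host = unique_urls / hosts
avg_bytes_per_capture = approx_total_bytes / captures

print(f"captures per unique URL: {captures_per_url:.2f}")
print(f"unique URLs per host:    {urls_per_host:.1f}")
print(f"approx. on-disk KB per capture: {avg_bytes_per_capture / 1000:.0f}")
```

On these numbers, most URLs were captured only once, each host contributed a little under 80 URLs on average, and each capture accounts for something on the order of 30 KB on disk.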

The seed list for this crawl was a list of Alexa’s top 1 million web sites, retrieved close to the crawl start date. We used Heritrix (3.1.1-SNAPSHOT) crawler software and respected robots.txt directives. The scope of the crawl was not limited except for a few manually excluded sites. However, this was a somewhat experimental crawl for us, as we were using newly minted software to feed URLs to the crawlers, and we know there were some operational issues with it. For example, in many cases we may not have crawled all of the embedded and linked objects in a page, since the URLs for these resources were added into queues that quickly grew bigger than the intended size of the crawl (and therefore we never got to them). We also included repeated crawls of some Argentinian government sites, so results broken down by country will be somewhat skewed. We have made many changes to how we do these wide crawls since this particular example, but we wanted to make the data available “warts and all” for people to experiment with. We have also done some further analysis of the content.

If you would like access to this set of crawl data, please contact us at info at archive dot org and let us know who you are and what you’re hoping to do with it. We may not be able to say “yes” to all requests, since we’re just figuring out whether this is a good idea, but everyone will be considered.

This is awesome and you should feel awesome!

Hi,

I’m a student of , Pilani campus. I’m pursuing my degree in Electrical and Electronics Engineering.

However, I am currently an intern in MuSigma Business Solutions, Bangalore and have familiarized myself with data analysis and am specifically working on Text Mining (Opinion mining and Sentiment mining along with social media analysis to be precise) using .

I am a blogger and run a personal blog which has my musings on the world. It has a small niche of followers and they were all completely unexpected. I’m also interning as a writer for an online magazine.

I’d like to continue working in both these areas in my last semester on campus and do a study on what makes a good blog good. I’d like to study the traffic to personal blogs and see where it comes from: what are the general channels that generate traffic to personal blogs? I hope to someday develop a research paper about the social network structure of the blogosphere, which is innate in its nature and rarely mentioned explicitly.

NOTE: The work I hope to do is purely at a personal level 🙂

Hope you like my idea.

Dear Internet Archive

The Concise Model of the Universe is a project which aims to capture everything that interests me and therefore many other people who are also able to add content. Unfortunately the people hosting The Model did not keep backups as promised and have crashed twice leading to a massive loss of information.

I would love to be able to explore your resource to recover lost information and in the process to find information that should be included on The Model.

Thank you for your consideration of my request.

Paul Annear

If it has a URL, you should be able to see it in the Wayback Machine.

I bet you have tried this, but just in case.

-brewster

I would only be interested in this material for genealogical purposes.

Thanks for all of your hard work.

I’m developing a meta-research tool that extracts the references from research papers. I’m wondering if the crawl contains any document files & if so, access to them would provide me with:

– a giant corpus to test the extractor and other functions against (especially if any are written in a language other than English)

– a great seed for a bibliometric/bibliographic database, as well as a global research graph

This would be for a commercial service, but I will be open-sourcing the project under the MIT license. I’m planning on charging $5/mo or less for the service & hope to sell it to one of the companies producing similar research/bibliographic tools (e.g. Zotero).

We would like to use the data to test some of our analytical tools, e.g. text extraction, visualization widgets, etc.

Thank you,

John

I would love to be able to explore your resource to find lost information about an old PLC (programmable logic controller). I have an old machine but I don’t have the program, so this would be a great opportunity to find it. I would also like to find some details about old personal computers that I can’t find on the internet now.

Thanks for all of your hard work.

Nikolaj
