I love working with the Internet Archive’s collections, especially the growing book collection. As an engineer and sometimes scholar, I know there’s a lot of human knowledge inside books that’s difficult to discover. What new things could we do to help our users discover knowledge in books?

Today, most people access books through card catalog search and full-text search, both essentially 20th-century technologies. If you ask for something broad or ambiguous because you don’t yet know what you’re looking for, any short list of the “most relevant” results is likely to be too narrow to inspire discovery or serendipity.

For the past few months, I’ve been experimenting with a new way to visualize book contents. This experiment starts with one simple idea: Most sentences contain related things. If I see a concept and a year together in a sentence, the odds are that the two are related. Consider this sentence:

A new, Gregorian Calendar, was introduced by Pope Gregory XIII in 1582.

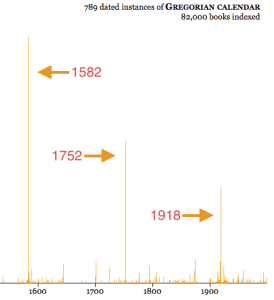

I’ll explain in a minute how I figured out that Gregorian Calendar and Pope Gregory XIII are things, and that 1582 is a year. Given that, what can we learn from the sentence? We can guess that these things and the year are probably associated with each other. This guess is sometimes wrong, but let’s try adding together data from around a hundred thousand books and see what happens:

Three years have a relatively large number of sentences containing “Gregorian calendar” and that year. Are these important dates in the history of the Gregorian Calendar? Yes: in 1582, Pope Gregory XIII had Catholic countries adopt this new calendar, replacing the Julian calendar. In 1752, England adopted it, and in 1918, after the Russian Revolution, Bolshevik Russia adopted it.
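The "adding together" step can be sketched as a simple co-occurrence count. This is a minimal illustration rather than the project's actual code: the function names and the sample input are assumptions, and each input item stands for the things and years already extracted from one sentence.

```python
from collections import Counter

def count_cooccurrences(sentences):
    """Count how often each (thing, year) pair shares a sentence.

    `sentences` is an iterable of (things, years) tuples, one per sentence.
    """
    counts = Counter()
    for things, years in sentences:
        # Use sets so a pair is counted at most once per sentence.
        for thing in set(things):
            for year in set(years):
                counts[(thing, year)] += 1
    return counts

def years_for(counts, thing):
    """Collapse the pair counts into a per-year histogram for one thing."""
    per_year = Counter()
    for (name, year), n in counts.items():
        if name == thing:
            per_year[year] += n
    return per_year

# Hypothetical extracted data, for illustration only.
sample = [
    (["Gregorian calendar", "Pope Gregory XIII"], [1582]),
    (["Gregorian calendar"], [1582]),
    (["Gregorian calendar"], [1752]),
    (["Julian calendar"], [1582]),
]
histogram = years_for(count_cooccurrences(sample), "Gregorian calendar")
```

Plotting a histogram like this per thing is one plausible way to make a few years stand out from the rest.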

Let’s take a look at some of the actual book sentences from the most popular year, 1582:

1582

The Cambridge handbook of physics formulas

The routine is designed around FORTRAN or C integer arithmetic and is valid for dates from the onset of the Gregorian calendar, 15 October 1582.

The Cambridge history of English literature, 1660-1780

In 1582 Pope Gregory XIII (hence the name Gregorian Calendar) ordered ten days to be dropped from October to make up for the errors that had crept into the so-called Julian Calendar instituted by Julius Caesar, which made the year too long and added a day every one hundred and twenty-eight years.

They give year, month, and day in cyclical characters and their equivalent in the Western calendar (using the modern Gregorian calendar even for pre-1582 dates).

The crest of the peacock : non-European roots of mathematics

Clavius was a member of the commission that ultimately reformed the Gregorian calendar in 1582.

You can give the experiment a try at https://books.archivelab.org/dateviz/.

Now that you’ve seen what the experiment looks like, let’s look at some of the details of building this visualization. (The code can be found on GitHub at https://github.com/wumpus/visigoth/.)

We need a way to find dates in sentences. Sometimes it’s obvious that something is a date: “January 31, 2016” or “Jan 2016.” Other times it’s more ambiguous: a 4-digit number might be a year, or it might be a section of a US law (“15 U.S.C. § 1692”), or a page number in a book. What I ended up doing was creating a series of patterns (see https://github.com/wumpus/visigoth/blob/master/visigoth/dateparse.py) that look for English helper words (“In 2016”, “before 1812”) before guessing that a 4-digit number is a date. While this technique produces both false positives and false negatives, it works well enough not to hurt the visualization significantly.
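To give a flavor of the helper-word idea, here is a minimal sketch using regular expressions. These are not the patterns in dateparse.py: the helper-word list, the year range (1000 to 2099), and the name `find_years` are all assumptions made for illustration.

```python
import re

# Month names and common abbreviations, with an optional trailing period.
MONTH = (r"(?:Jan(?:uary)?|Feb(?:ruary)?|Mar(?:ch)?|Apr(?:il)?|May|Jun(?:e)?"
         r"|Jul(?:y)?|Aug(?:ust)?|Sep(?:t(?:ember)?)?|Oct(?:ober)?"
         r"|Nov(?:ember)?|Dec(?:ember)?)\.?")
# Assumed plausible year range: 1000-2099.
YEAR = r"(?P<year>1\d{3}|20\d{2})"

PATTERNS = [
    # Explicit dates: "January 31, 2016" or "Jan 2016".
    re.compile(rf"\b{MONTH}\s+(?:\d{{1,2}},?\s+)?{YEAR}\b"),
    # Helper word followed by a 4-digit number: "In 2016", "before 1812".
    re.compile(rf"\b(?:in|before|after|since|until|during)\s+{YEAR}\b",
               re.IGNORECASE),
]

def find_years(sentence):
    """Return the distinct years found, ordered by pattern and then position."""
    years = []
    for pattern in PATTERNS:
        for match in pattern.finditer(sentence):
            year = int(match.group("year"))
            if year not in years:
                years.append(year)
    return years
```

A bare 4-digit number with no month or helper word in front of it, such as the “1692” in a legal citation, is simply ignored, which trades some false negatives for fewer false positives.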

The next item is generating the list of things (people, places, concepts, etc.) in a sentence. There are many techniques for doing this, ranging from computationally-expensive machine-learning libraries like the Stanford NER library, to using human-generated lists such as the US Library of Congress Name Authority Files. There’s also the complication of disambiguating things like “John Smith.” (Which “John Smith” of the hundreds do we mean?) To match the simple nature of the other algorithms in this experiment, I decided to use a very simple dataset: English Wikipedia article titles. Not only is this a comprehensive collection of encyclopedic things, but there are numerous human-generated “redirects,” which provide a list of synonyms for most article titles. For example, “Western calendar” is a redirect to “Gregorian Calendar,” and in fact numerous books do use the term “Western calendar” to refer to the Gregorian calendar.
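A title-and-redirect lookup of this kind might look like the sketch below. The tiny `TITLES` and `REDIRECTS` samples, the case-insensitive matching, and the four-word limit are assumptions for illustration; real data would come from Wikipedia's title and redirect lists, and the project's own matching may differ.

```python
import re

# Hypothetical miniature samples standing in for the full Wikipedia data.
TITLES = {"Gregorian calendar", "Pope Gregory XIII", "Julian calendar"}
REDIRECTS = {"Western calendar": "Gregorian calendar"}

def build_lookup(titles, redirects):
    """Map lowercased titles and redirect names to canonical article titles."""
    lookup = {title.lower(): title for title in titles}
    for alias, target in redirects.items():
        lookup[alias.lower()] = target
    return lookup

def find_things(sentence, lookup, max_words=4):
    """Greedy longest-match scan of word n-grams against the lookup table."""
    words = re.findall(r"[\w'-]+", sentence)
    found = []
    i = 0
    while i < len(words):
        # Try the longest phrase starting at word i first.
        for n in range(min(max_words, len(words) - i), 0, -1):
            candidate = " ".join(words[i:i + n]).lower()
            if candidate in lookup:
                found.append(lookup[candidate])
                i += n
                break
        else:
            i += 1  # no phrase starts here; move on one word
    return found

LOOKUP = build_lookup(TITLES, REDIRECTS)
```

Because redirects resolve to the canonical title, a sentence that says “Western calendar” is counted toward “Gregorian calendar”. This sketch does nothing about the “John Smith” ambiguity problem.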

Our next task is ranking. Two aspects of this visualization use ranks. First, the suggestions that come up while users are typing in the “thing” box are ordered by Wikipedia article popularity. Eventually we’ll have enough usage of this visualization that we can use our own users’ data to put suggestions in a better order. Until then, using Wikipedia popularity is a good way to make suggestions more relevant.
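Ordering suggestions by popularity can be as simple as a filtered sort. In this sketch the function name `suggest` and the popularity numbers are made up for illustration; a real version would load per-article popularity figures from Wikipedia data.

```python
def suggest(prefix, popularity, limit=5):
    """Return titles starting with `prefix`, most popular first.

    `popularity` maps article title -> popularity score (higher is better).
    Ties are broken alphabetically so the order is stable.
    """
    prefix = prefix.lower()
    matches = [title for title in popularity if title.lower().startswith(prefix)]
    return sorted(matches, key=lambda title: (-popularity[title], title))[:limit]

# Hypothetical popularity scores, not real Wikipedia statistics.
POPULARITY = {
    "Gregorian calendar": 900,
    "Gregorian chant": 700,
    "Gregory of Tours": 100,
    "Julian calendar": 500,
}
```

A linear scan like this is fine for a sketch; with millions of titles, a sorted index or prefix tree would be the more likely choice.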

The second aspect is a ranking of the books themselves, which is useful in two ways. First, it’s used to pick which example sentences are shown for a given thing/date pair. Second, given that I only had enough computational resources to process a fraction of the scanned books in the Internet Archive’s collection, I chose 82,000 books using the same ranking scheme. This ranking scheme doesn’t have to be that good in order to deliver a lot of benefit, so I chose a superficial approach of awarding points for academic book publishing houses, book references in Wikipedia articles, and book popularity data from Better World Books, which is a used bookseller and a partner of the Internet Archive.
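A points-based scheme along these lines could be sketched as follows. Everything specific here is an assumption: the `Book` fields, the sample publisher list, the weights, and the cap on citation points are invented for illustration and are not the values used in the experiment.

```python
from dataclasses import dataclass

@dataclass
class Book:
    title: str
    publisher: str
    wikipedia_citations: int  # times the book is referenced in Wikipedia
    popularity: float         # hypothetical bookseller signal, scaled 0..1

# Hypothetical sample of academic publishing houses.
ACADEMIC_PUBLISHERS = {"Cambridge University Press", "Princeton University Press"}

def score(book):
    """Award points for each signal; the weights are arbitrary placeholders."""
    points = 0.0
    if book.publisher in ACADEMIC_PUBLISHERS:
        points += 2.0
    # Cap citation points so one heavily cited book cannot dominate.
    points += min(book.wikipedia_citations, 10) * 0.5
    points += book.popularity * 3.0
    return points

def top_books(books, k):
    """Pick the k highest-scoring books, e.g. to choose which ones to process."""
    return sorted(books, key=score, reverse=True)[:k]
```

The same `score` function can serve both purposes described above: selecting which books to process and choosing which book's sentence to show first.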

What’s the result of the experiment? A relatively simple set of algorithms applied to a small collection of high-quality books seems to be both interesting and fun for users. As a next step, I would like to extend it to include a better list of “things”, and extract data from many more books. In a few years, we might have access to 100 times as many scanned books. By then, I hope to find several other new ways to explore book content.