Today the Internet Archive announces a new initiative to fix broken links across the Internet. We have 360 billion archived URLs, and now we want you to help us bring those pages back out onto the web to heal broken links everywhere.

When I discover the perfect recipe for Nutella cookies, I want to make sure I can find those instructions again later. But if the average lifespan of a web page is 100 days, bookmarking a page in your browser is not a great plan for saving information. The Internet echoes with the empty spaces where data used to be. Geocities – gone. Friendster – gone. Posterous – gone. MobileMe – gone.

Imagine how critical this problem is for those who want to cite web pages in dissertations, legal opinions, or scientific research. A recent Harvard study found that 49% of the URLs referenced in U.S. Supreme Court decisions are dead now. Those decisions affect everyone in the U.S., but the evidence the opinions are based on is disappearing.

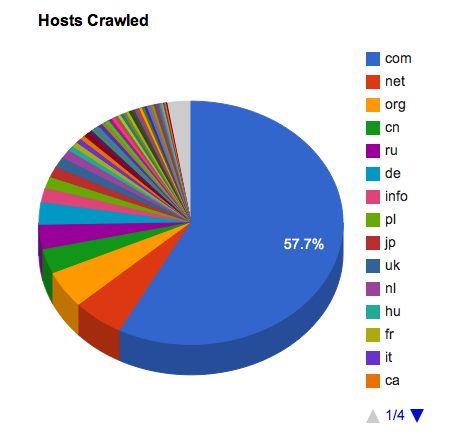

In 1996 the Internet Archive started saving web pages with the help of Alexa Internet. We wanted to preserve cultural artifacts created on the web and make sure they would remain available for the researchers, historians, and scholars of the future. We launched the Wayback Machine in 2001 with 10 billion pages. For many years we relied on donations of web content from others to build the archive. In 2004 we started crawling the web on behalf of a few large partner organizations, and of course that content also went into the Wayback Machine. In 2006 we launched Archive-It, a web archiving service that allows librarians and others interested in saving web pages to create curated collections of valuable web content. In 2010 we started archiving wide portions of the Internet on our own behalf. Today, between our donating partners, thousands of librarians and archivists, and our own wide crawling efforts, we archive around one billion pages every week. The Wayback Machine now contains more than 360 billion URL captures.

FTC.gov directed people to the Wayback Machine during the recent shutdown of the U.S. federal government.

We have been serving archived web pages to the public via the Wayback Machine for twelve years now, and it is gratifying to see how this service has become a medium of record for so many. Wayback pages are cited in papers, referenced in news articles and submitted as evidence in trials. Now even the U.S. government relies on this web archive.

We’ve also had some problems to overcome. This time last year the contents of the Wayback Machine were at least a year out of date. There was no way for individuals to ask us to archive a particular page, so you could only cite an archived page if we already had the content. And you had to know about the Wayback Machine and come to our site to find anything. We have set out to fix those problems, and hopefully we can fix broken links all over the Internet as a result.

Up to date. Newly crawled content appears in the Wayback Machine about an hour after we get it. We are constantly crawling the Internet and adding new pages, and many popular sites get crawled every day.

Save a page. We have added the ability to archive a page instantly and get back a permanent URL for it in the Wayback Machine. This service allows anyone (Wikipedia editors, scholars, legal professionals, students, or home cooks like me) to create a stable URL to cite, share, or bookmark any information they want to be able to access in the future. Check out the new front page of the Wayback Machine and you’ll see the “Save Page” feature in the lower right corner.
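To make the two URLs involved in saving a page concrete, here is a minimal Python sketch. It assumes the public `https://web.archive.org/save/<url>` form for requesting an on-demand capture and the `https://web.archive.org/web/<timestamp>/<url>` form for a permanent capture URL, where the timestamp is 14 digits (YYYYMMDDhhmmss, UTC). The helper names are hypothetical, and the code only builds URLs; it never contacts the archive.

```python
from datetime import datetime, timezone

# Assumed public Wayback Machine host; both URL shapes below are assumptions
# about the service, and nothing in this sketch makes a network request.
WAYBACK = "https://web.archive.org"


def save_request_url(target: str) -> str:
    """Hypothetical helper: URL that asks the Wayback Machine to capture `target` now."""
    return f"{WAYBACK}/save/{target}"


def permanent_url(target: str, captured_at: datetime) -> str:
    """Hypothetical helper: citable URL for a capture of `target` taken at `captured_at`.

    `captured_at` must be timezone-aware; Wayback timestamps are 14 digits
    (YYYYMMDDhhmmss) expressed in UTC.
    """
    if captured_at.tzinfo is None:
        raise ValueError("captured_at must be timezone-aware")
    stamp = captured_at.astimezone(timezone.utc).strftime("%Y%m%d%H%M%S")
    return f"{WAYBACK}/web/{stamp}/{target}"
```

Under these assumptions, `permanent_url("http://example.com/cookies", datetime(2013, 10, 24, 12, 0, tzinfo=timezone.utc))` yields `https://web.archive.org/web/20131024120000/http://example.com/cookies`: the kind of stable link a home cook could bookmark or a scholar could cite.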

Do we have it? We have developed an Availability API that will let developers everywhere build tools to make the web more reliable. We have built a few tools of our own as a proof of concept, but what we really want is to allow people to take the Wayback Machine out onto the web.
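As we understand it, the Availability API is a plain HTTP GET against `https://archive.org/wayback/available` with a `url` parameter and an optional `timestamp` parameter (YYYYMMDDhhmmss, possibly truncated), returning JSON whose `archived_snapshots.closest` object describes the nearest capture. The Python sketch below assumes that endpoint and response shape; the function names are hypothetical. Only `check_availability` touches the network, so the query-building and parsing logic can be exercised offline.

```python
import json
from typing import Optional
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed Availability API endpoint.
AVAILABILITY_ENDPOINT = "https://archive.org/wayback/available"


def availability_query(url: str, timestamp: Optional[str] = None) -> str:
    """Build the GET URL; `timestamp` asks for the capture closest to that time."""
    params = {"url": url}
    if timestamp:
        params["timestamp"] = timestamp
    return f"{AVAILABILITY_ENDPOINT}?{urlencode(params)}"


def closest_snapshot(response_body: str) -> Optional[str]:
    """Return the archived URL from a response body, or None if nothing usable exists."""
    data = json.loads(response_body)
    # Assumed shape: {"archived_snapshots": {"closest": {"available": ..., "url": ...}}}
    closest = data.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None


def check_availability(url: str, timestamp: Optional[str] = None) -> Optional[str]:
    """Network call: ask the Availability API whether `url` has been archived."""
    with urlopen(availability_query(url, timestamp), timeout=10) as resp:
        return closest_snapshot(resp.read().decode("utf-8"))
```

A tool built on this would treat a `None` result (for example, a response whose `archived_snapshots` object is empty) as "not archived", and otherwise offer the returned URL as a replacement for the dead link.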

Fixing broken links. We started archiving the web before Google, before YouTube, before Wikipedia, before people started to treat the Internet as the world’s encyclopedia. With all of the recent improvements to the Wayback Machine, we now have the ability to start healing the gaping holes left by dead pages on the Internet. We have started by working with a couple of large sites, and we hope to expand from there.

WordPress.com is one of the top 20 sites in the world, with hundreds of millions of users each month. We worked with Automattic to get a feed of new posts made to WordPress.com blogs and self-hosted WordPress sites. We crawl the posts themselves, as well as all of their outlinks and embedded content, about 3,000,000 URLs per day. This is great for archival purposes, but we also want to use the archive to make sure WordPress blogs are reliable sources of information. To start with, we worked with Janis Elsts, a developer from Latvia who focuses on WordPress plugin development, to put suggestions from the Wayback Machine into his Broken Link Checker plugin. This plugin has been downloaded 2 million times, and now when its users find a broken link on their blog they can instantly replace it with an archived version. We continue to work with Automattic to find more ways to fix or prevent dead links on WordPress blogs.

Wikipedia.org is one of the most popular information resources in the world, with almost 500 million users each month. Of the millions of amazing articles we all rely on, 125,000 currently contain dead links. We have started crawling the outlinks of every new article and update as it is made, archiving about 5 million new URLs every day. Now we have to figure out how to get archived pages back into Wikipedia to fix some of those dead links. Kunal Mehta, a Wikipedian from San Jose, recently wrote a prototype bot that can add an archived version to any link in Wikipedia, so that when a link is determined to be dead it can be switched over automatically and continue to work. The bot still has to go through the process the Wikipedia editing community uses to approve bots, which will take a while, but that conversation is under way.

Every webmaster. Webmasters can add a short snippet of code to their 404 page that will let users know if the Wayback Machine has a copy of the page in our archive – your web pages don’t have to die!
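The snippet itself isn't reproduced here. As a hedged illustration of the same idea, the hypothetical server-side Python helper below turns an Availability API response for the missing page's URL into a notice a 404 handler could render. It assumes the response shape `archived_snapshots.closest` with `available`, `url`, and `timestamp` fields; the function name and the notice wording are illustrative only.

```python
import html
import json


def wayback_notice(availability_json: str) -> str:
    """Hypothetical 404-page helper: return an HTML notice, or "" if nothing is archived.

    `availability_json` is assumed to be the Availability API's JSON response
    for the URL that just returned 404.
    """
    data = json.loads(availability_json)
    closest = data.get("archived_snapshots", {}).get("closest")
    if not closest or not closest.get("available"):
        return ""  # no capture: render the ordinary 404 page unchanged
    # Wayback timestamps start with YYYYMMDD; show only the capture date.
    ts = closest.get("timestamp", "")
    date = f"{ts[0:4]}-{ts[4:6]}-{ts[6:8]}" if len(ts) >= 8 else "an earlier date"
    # Escape the archived URL before placing it inside an HTML attribute.
    href = html.escape(closest["url"], quote=True)
    return (
        "<p>This page no longer exists, but the Wayback Machine has a copy "
        f'from {date}: <a href="{href}">view the archived page</a>.</p>'
    )
```

Returning an empty string when there is no capture lets the handler fall back to its normal 404 page without any special casing.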

We started with a big goal: to archive the Internet and preserve it for history. This year we started looking at smaller goals: archiving a single page on request, making pages available more quickly, and letting you get information back out of the Wayback Machine in an automated way. We have spent 17 years building this amazing collection; let’s use it to make the web a better place.

Thank you so much to everyone who has helped to build such an outstanding resource, in particular:

Adam Miller

Alex Buie

Alexis Rossi

Brad Tofel

Brewster Kahle

Ilya Kreymer

Jackie Dana

Janis Elsts

Jeff Kaplan

John Lekashman

Kenji Nagahashi

Kris Carpenter

Kristine Hanna

Kunal Mehta

Martin Remy

Raj Kumar

Ronna Tanenbaum

Sam Stoller

SJ Klein

Vinay Goel

Have you ever clicked on a web link only to get the dreaded “404 Document not found” (dead page) message? Have you wanted to see what that page looked like when it was alive? Well, now you’re in luck.

For 20 years, the Internet Archive has been crawling the web, and is currently preserving web captures at the rate of one billion per week. With support from the Laura and John Arnold Foundation, we are making improvements, including weaving the Wayback Machine into the fabric of the web itself.

Internet Archive crawls and saves web pages and makes them available for viewing through the
