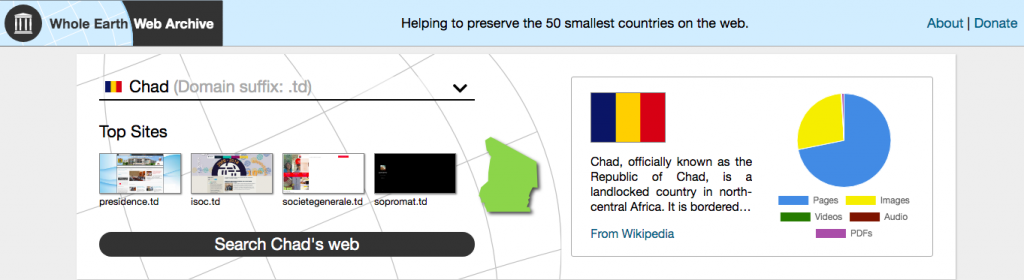

Since 2017, Internet Archive has pursued dedicated technical and partnership work to help preserve and provide perpetual access to open access scholarly literature and other outputs. See our original announcement related to this work and a recent update on progress. The official press release below announces an exciting new multi-institutional collaboration in this area.

The Directory of Open Access Journals (DOAJ), the CLOCKSS Archive, Internet Archive, Keepers Registry/ISSN International Centre and Public Knowledge Project (PKP) have agreed to partner to provide an alternative pathway for the preservation of small-scale, APC-free, Open Access journals.

The recent study authored by M. Laakso, L. Matthias, and N. Jahn has revived academia’s concern over the disappearance of the scholarly record disseminated in Open Access (OA) journals.

Their research identifies OA journals as being at risk of vanishing, “especially small-scale and APC-free journals […] with limited financial resources” that often “opt for lightweight technical solutions” and “cannot afford to enroll in preservation schemes.” Using data available in the Directory of Open Access Journals, the authors conclude that just under half of the journals indexed in DOAJ participate in preservation schemes. Their findings “suggest that current approaches to digital preservation are successful in archiving content from larger journals and established publishing houses but leave behind those that are more at risk.” They call for new preservation initiatives “to develop alternative pathways […] better suited for smaller journals that operate without the support of large, professional publishers.”

Answering that call, the joint initiative proposed by the five organisations aims to offer an affordable archiving option to OA journals with no author fees (“diamond” OA) registered with DOAJ, and to raise awareness among the editors and publishers of these journals about the importance of enrolling with a preservation solution. DOAJ will act as a single interface with CLOCKSS, PKP and Internet Archive and facilitate a connection to these services for interested journals. Lars Bjørnshauge, DOAJ Managing Editor, said: “That this group of organisations are coming together to find a solution to the problem of ‘vanishing’ journals is exciting. It comes as no surprise that journals with little to no funding are prone to disappearing. I am confident that we can make a real difference here.”

Reports regarding the effective preservation of the journals’ content will be aggregated by the ISSN International Centre (ISSN IC) and published in the Keepers Registry. Gaëlle Béquet, ISSN IC Director, commented: “As the operator of the Keepers Registry service, the ISSN International Centre receives inquiries from journal publishers looking for archiving solutions. This project is a new step in the development of our service to meet this need in a transparent and diverse way involving all our partners.”

About 50% of the journals identified by DOAJ as having no archiving solution in place use Open Journal Systems (OJS). Therefore, the initiative will also identify and encourage journals on PKP’s OJS platform to preserve their content in the PKP Preservation Network (PKP PN), or to use another supported solution if the OJS instance isn’t new enough to be compatible with the PN integration (OJS 3.1.2+).

The partners will then follow up by assessing the success and viability of the initiative, with the aim of opening it up to new archiving agencies and other groups of journals indexed in DOAJ to consolidate preservation actions and ensure service diversity.

DOAJ will act as the central hub where publishers will indicate that they want to participate. Archiving services provided by CLOCKSS, Internet Archive and PKP will expand their existing capacities. These agencies will report their metadata to the Keepers Registry to provide an overview of the archiving efforts.

Project partners are currently exploring business and financial sustainability models and outlining areas for technical collaboration.

DOAJ is a community-curated list of peer-reviewed, open access journals and aims to be the starting point for all information searches for quality, peer-reviewed open access material. DOAJ’s mission is to increase the visibility, accessibility, reputation, usage and impact of quality, peer-reviewed, open access scholarly research journals globally, regardless of discipline, geography or language. DOAJ will work with editors, publishers and journal owners to help them understand the value of best practice publishing and standards and apply those to their own operations. DOAJ is committed to being 100% independent and maintaining all of its services and metadata as free to use or reuse for everyone.

CLOCKSS is a not-for-profit joint venture among the world’s leading academic publishers and research libraries whose mission is to build a sustainable, international, and geographically distributed dark archive with which to ensure the long-term survival of Web-based scholarly publications for the benefit of the greater global research community. https://www.clockss.org.

Internet Archive is a non-profit digital library, a top-200 website at https://archive.org/, and an archive of over 60 PB comprising millions of free books, movies, software, music, websites, and more. The Internet Archive partners with over 800 libraries, universities, governments, non-profits, scholarly communications, and open knowledge organizations around the world to advance the shared goal of “Universal Access to All Knowledge.” Since 2017, Internet Archive has pursued partnerships and technical work with a focus on preserving all publicly accessible research outputs, especially at-risk, open access journal literature and data, and providing mission-aligned, non-commercial open infrastructure for the preservation of scholarly knowledge.

Keepers Registry, hosted by the ISSN International Centre, an intergovernmental organisation under the auspices of UNESCO, is a global service that monitors the archiving arrangements for continuing resources, including e-serials. A dozen archiving agencies around the world currently report to Keepers Registry. The Registry has three main purposes: 1/ to enable librarians, publishers and policy makers to find out who is looking after what e-content, how, and with what terms of access; 2/ to highlight e-journals which are still “at risk of loss” and need to be archived; 3/ to showcase the archiving organizations around the world, i.e. the Keepers, which provide the digital shelves for access to content over the long term.

PKP is a multi-university and long-standing research project that develops (free) open source software to improve the quality and reach of scholarly publishing. For more than twenty years, PKP has played an important role in championing open access. Open Journal Systems (OJS) was released in 2002 to help reduce cost as a barrier to creating and consuming scholarship online. Today, it is the world’s most widely used open source platform for journal publishing: approximately 42% of the journals in the DOAJ identify OJS as their platform/host/aggregator. In 2014, PKP launched its own Private LOCKSS Network (now the PKP PN) to offer OJS journals unable to invest in digital preservation a free, open, and trustworthy service.

For more information, contact:

DOAJ: Dom Mitchell, dom@doaj.org

CLOCKSS: Craig Van Dyck, cvandyck@clockss.org

Internet Archive: Jefferson Bailey, jefferson@archive.org

Keepers Registry: Gaëlle Béquet, gaelle.bequet@issn.org

PKP: James MacGregor, jbm9@sfu.ca